As virtual reality advances, the fields it can be applied to and enhance seem endless. In education, it can enhance classrooms by transporting a class of students to places they could not physically visit and by using 360° resources to raise engagement in their learning (King-Thompson, 2017). It can also be applied to the entertainment sector, as with Yosemite VR cinema, the first permanent VR cinema in the United States (Sheremetov and Slesar, 2023).

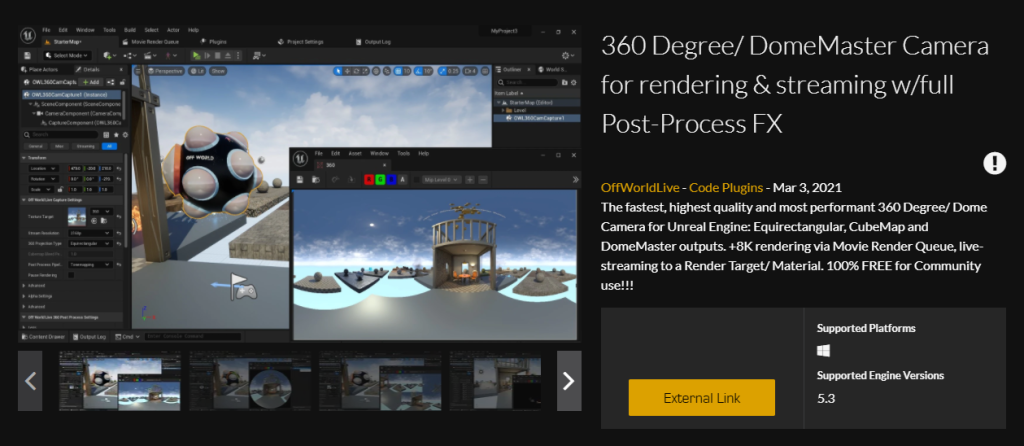

For my Emerging Technologies research project, I am planning to create a 360° video with Unreal Engine 5.2, as this allows me to make use of the engine's Nanite and Lumen systems before rendering at 4K resolution, creating a highly detailed and visually interesting experience. I will be using Off World Live's 360° rendering plugin from the Unreal Marketplace to capture frames at 4K resolution. The project will be a stationary experience featuring 3 degrees of freedom for the viewer, with spatial audio added during the post-production stage.

THE PROJECT

For this project, I am going to create a 360° video in Unreal Engine that places the viewer within a cityscape inspired by the cyberpunk genre. I chose Unreal Engine 5 over software such as Unity purely because of my experience with it: I know that it is possible to create 360° videos in Unity, but I am extremely unfamiliar with Unity and its interface, which would only restrict and hinder what I plan to make. I also considered using Maya, given that I have already created a 360° video in it as part of this module, but ultimately decided that Unreal Engine would allow for a more detailed final product. Furthermore, rendering at 4K resolution takes significantly less time in Unreal Engine than in Maya, which further solidified my choice of Unreal Engine 5.2.

My inspiration for this project comes from my personal fondness for dystopian games and films, such as those in the aforementioned cyberpunk genre. Games such as Cyberpunk 2077 (CDPROJEKTRED, 2020), Deus Ex: Human Revolution (Eidos Montreal, 2013) and Cloudpunk (ION LANDS, 2020), which all feature varying elements of the cyberpunk genre, are sources of inspiration for how I plan this experience to look. I plan on using neon lights sparingly and at a distance throughout the experience, as bright lights viewed through a head-mounted display may cause eye strain if the viewer is exposed to them for a long period of time (Ayaga, 2023).

I find that the dystopian genre can be expanded on in a variety of ways, which can then be used to create an engaging virtual reality experience. As the setting is entirely fictional and my project is primarily for entertainment, there are a multitude of creative directions I can take. Because it is not a recreation of a real-world place or a famous painting, I am not limited in what I can create.

An additional feature that I will be using is Off World Live's 360° virtual camera for Unreal Engine (Off World Live, 2021), a free plugin on the Unreal Marketplace, rather than the engine's built-in plugin. Through testing, I found that Off World Live's plugin captures high-quality renders in Unreal Engine in a relatively short amount of time: I was able to render 180 frames of a highly detailed Unreal level at 4K resolution in 10 minutes. The version of the plugin that I will be using is "v.0.209.2" for Unreal Engine 5.2.
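The test figure of 180 frames in 10 minutes can be turned into a rough per-frame cost and used to estimate how long the final render might take. This is a back-of-the-envelope sketch only: it assumes a playback rate of 30 frames per second and a hypothetical final length of two minutes, neither of which is fixed in this plan, and it assumes the final scene renders at roughly the same speed as the test level.

$$
t_{\text{frame}} = \frac{10 \times 60\ \text{s}}{180\ \text{frames}} \approx 3.33\ \text{s per frame}
$$

$$
\frac{180\ \text{frames}}{30\ \text{fps}} = 6\ \text{s of footage}
$$

$$
120\ \text{s} \times 30\ \text{fps} = 3600\ \text{frames}, \qquad 3600 \times 3.33\ \text{s} \approx 12\,000\ \text{s} \approx 3.3\ \text{h}
$$

If the finished scene contains more detail than the test level, the per-frame time, and therefore the total, would rise accordingly, so a buffer for re-renders is worth building into the schedule.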

Unreal Engine's built-in plugin for 360° rendering has very little official documentation and had some issues when I tested it with staff. For example, when rendering a sequence, the plugin had problems with its file naming conventions, which required further work and code to fix; this could impact my schedule if I continued to use this method. Moreover, when testing with staff, the built-in plugin took an extremely long time to render frames from a scene with very little detail in it. In comparison, the longest I had to wait whilst experimenting with Off World Live's plugin was 10 minutes for a 4K render of a highly detailed scene, filled with particle systems and repeating high-quality textures.

ETHICAL CONSIDERATIONS AND PLANNING THE USER EXPERIENCE

In my project, I plan on using holographic advertisements for fictional companies to make this fantastical city feel alive, bustling and lived in by a wide range of people, and much bigger than what the viewer can see from their position in the scene. With this inclusion, however, comes an ethical consideration regarding advertising in a virtual reality space. As this experience is for entertainment, I plan on opening the video with a black screen stating that the advertisements are not real, providing transparency to the viewer and avoiding any potential deception.

This experience will use 3 degrees of freedom, allowing the viewer to look around the space freely in all directions but not move through it. This makes it more accessible, as the video can be viewed on a wide range of devices, from mobile phones to desktop PCs and virtual reality headsets, allowing more people to experience it regardless of their hardware. Furthermore, people can use the experience regardless of the physical space around them, as it only uses the three rotational axes and not the additional translational axes used by experiences with 6 degrees of freedom (Mindport, 2023).
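To make the difference between rotational and translational axes concrete, the sketch below maps a 3DoF head orientation (yaw and pitch) to the pixel at the centre of the viewer's gaze in a 360° frame. It assumes the video is stored as a 2:1 equirectangular image at 3840×1920 with yaw 0 at the centre of the frame; the resolution and the function name `gaze_to_pixel` are hypothetical and are not taken from Unreal Engine or the Off World Live plugin.

```python
import math  # not strictly needed; kept for readers extending this to vectors

# Hypothetical 4K equirectangular frame size (2:1 aspect ratio). The real
# output dimensions of the render are an assumption here.
FRAME_WIDTH = 3840
FRAME_HEIGHT = 1920


def gaze_to_pixel(yaw_deg: float, pitch_deg: float,
                  width: int = FRAME_WIDTH,
                  height: int = FRAME_HEIGHT) -> tuple[int, int]:
    """Map a 3DoF head orientation to the pixel at the centre of the gaze.

    Only rotation is used: yaw (left/right) and pitch (up/down). Roll spins
    the view around the gaze direction, so it does not move the centre
    pixel, and the viewer's position is never needed because 3DoF ignores
    translation. Yaw 0 / pitch 0 is assumed to be the centre ("front").
    """
    # Wrap yaw into [-180, 180) so turning all the way round loops back.
    yaw = (yaw_deg + 180.0) % 360.0 - 180.0
    # Clamp pitch between straight down (-90) and straight up (+90).
    pitch = max(-90.0, min(90.0, pitch_deg))
    # Equirectangular: longitude maps linearly to x, latitude to y.
    x = (yaw + 180.0) / 360.0 * width
    y = (90.0 - pitch) / 180.0 * height
    # Keep the indices inside the image bounds.
    return min(int(x), width - 1), min(int(y), height - 1)


if __name__ == "__main__":
    print(gaze_to_pixel(0, 0))    # front: (1920, 960)
    print(gaze_to_pixel(180, 0))  # directly behind: (0, 960)
    print(gaze_to_pixel(0, -90))  # looking down at the ground: (1920, 1919)
```

Because the viewer's position never enters the calculation, the same frame works on a phone, a desktop player or a headset: each device only has to report which way the viewer is facing.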

I have also planned out key aspects of the user experience. As mentioned above, to keep the experience accessible, it will be stationary and feature 3 degrees of freedom, allowing the viewer to remain physically in one position and look around the space freely. Immersive experiences with 3 degrees of freedom can also minimise cybersickness (Thompson, 2023), as the viewer moves less. Furthermore, I will include a ground plane to ground the viewer in the experience. If the viewer starts to feel symptoms of cybersickness, they can look down at the floor, with the ground plane acting as a stable point of reference. This, in turn, can mitigate oncoming symptoms and maintain their presence in the experience (Wu et al., 2021).

As this project is a 360° video, the viewer will be able to look around the space freely, which poses the risk that they may be looking in a different direction whilst an animation is playing. Rather than limiting the field of view to 180°, I plan to use leading lights and sound to draw the viewer's attention to specific areas of interest throughout the video. Once the video has been rendered in 4K, I plan to reinforce these leading lights by adding spatial audio in Premiere Pro. As Doty (2017) notes in an article on Medium, "Stereoscopic sound design means audio becomes a hyper-effective tool for directing attention and furthering your story", which is something I would like to recreate in my project. My audio will be sourced from Epidemic Sound, as the quality of its audio clips is extremely high and will not detract from the final piece.

Additionally, to keep the viewer feeling safe, I plan to keep a set radius around the 360° camera as a "safe zone", ensuring that animated assets and effects never breach it. I intend for my immersive experience to be interesting and entertaining, transporting the viewer to a fantastical city rather than causing feelings of fear and heightened anxiety, which may result in physical reactions such as raised heart rate and blood pressure (LaMotte, 2017). I personally find that the best virtual reality experiences have strong narratives and build on the viewer's senses: what they can see whilst wearing the head-mounted display and what they can hear in the 360° space. This is what I plan to recreate for my project.
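The safe-zone rule above amounts to a simple distance check between the camera and each animated asset. The sketch below flags sampled points on an asset's path that come closer than a chosen radius. It is an illustration under stated assumptions, not a feature of Unreal Engine: the 300-unit radius (3 m, since Unreal measures in centimetres), the camera position and the function name `breaches_safe_zone` are all hypothetical.

```python
import math
from typing import Iterable

# Hypothetical values: Unreal units are centimetres, so 300 units is a
# 3 m safe zone. The real radius for this project has not been decided.
CAMERA_POSITION = (0.0, 0.0, 170.0)  # assumed camera at standing eye height
SAFE_RADIUS = 300.0

Point = tuple[float, float, float]


def breaches_safe_zone(path: Iterable[Point],
                       camera: Point = CAMERA_POSITION,
                       radius: float = SAFE_RADIUS) -> list[int]:
    """Return the indices of sampled path points inside the safe zone.

    `path` is a sequence of sampled positions for one animated asset, for
    example the keyframes of a flying vehicle. A point strictly closer
    than `radius` to the camera counts as a breach.
    """
    return [i for i, p in enumerate(path) if math.dist(p, camera) < radius]


if __name__ == "__main__":
    # Hypothetical vehicle flying overhead: closest approach is 430 units.
    vehicle = [(-1000.0, 0.0, 600.0), (0.0, 0.0, 600.0), (1000.0, 0.0, 600.0)]
    # Hypothetical pedestrian walking past 150 units from the camera.
    pedestrian = [(-500.0, 150.0, 170.0), (0.0, 150.0, 170.0), (500.0, 150.0, 170.0)]
    print(breaches_safe_zone(vehicle))     # []
    print(breaches_safe_zone(pedestrian))  # [1]
```

Checking only sampled points can miss a breach that happens between two samples, so the path would need to be sampled densely (for example, once per rendered frame) for the check to be trustworthy.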

PROJECT PLANNING WITH TRELLO/KANBAN METHOD

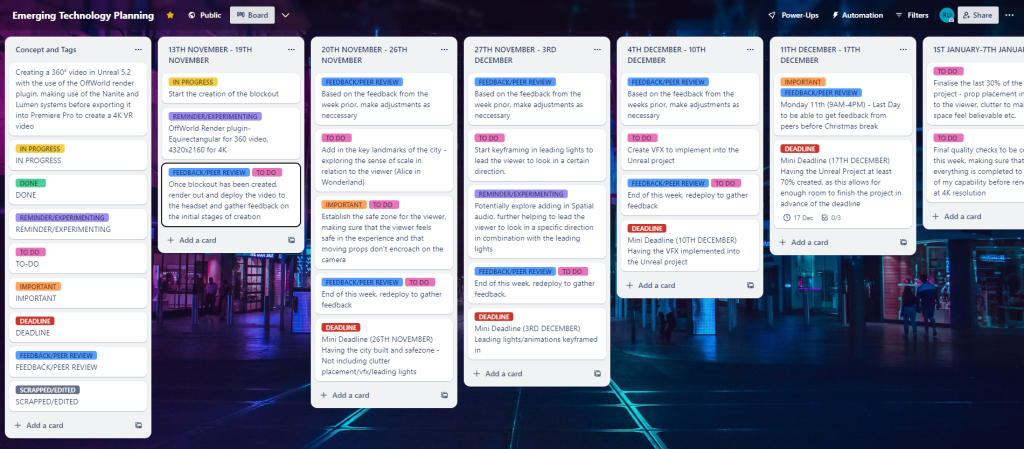

For my project, I am using Trello to plan out my production schedule. Trello is a Kanban-style planning tool that I am familiar with and have used multiple times before for various projects. I am a visual learner and find that Trello works very well for me, as I can see each individual card and move it between lists as needed. I find the ability to assign tags and labels to cards extremely helpful, as I can organise cards based on my needs, change, remove and create tags, and set up automation rules for cards with approaching deadlines.

I have previously tried other task management software such as HacknPlan and Monday.com, but struggled to understand their interfaces and how to use them to their full potential. Instead of learning new project management software, I decided to play to my strengths and use the method I have used since starting University.


The Trello board is broken down week by week, listing the tasks I need to complete to create the final outcome of the project. Having a structured schedule helps me stay on track and keeps tasks from piling up into a nigh-impossible workload, which would lead to crunch.

When planning this schedule, it was important to be realistic about what I could achieve in a week and not to create an impossible workload, as this would be detrimental to the quality of the work I produce, particularly since this project runs alongside another University module with a multitude of its own tasks. By setting myself weekly deadlines, I can steadily tackle the workload as the module progresses throughout the term.

I have also blocked in time at the start and end of each week to receive feedback from my peers. Weekly peer feedback gives me a wider range of perspectives on the project, allowing me to make improvements and create a well-rounded final product rather than something shaped only by my own input. My peers may suggest improvements I have not thought of, which can make the experience more accessible to a wide range of viewers and improve the final video.

I anticipate having at least 70 percent of the project done before the Christmas break starts on the 17th of December. This deadline allows me to keep testing the project with my peers using the University's shared equipment, receiving vital feedback before the University facilities close for the holidays. With most of the project complete and consistent peer feedback gathered over the weeks, I will then have two weeks to finalise the lighting, prop placement and clutter in the scene, creating a believable and visually interesting space for the viewer to be immersed in.

Moreover, my schedule allows time to deal with any errors or bugs that might arise, such as problems with rendering or with an animation in the scene. With a planned schedule, I am not at the mercy of circumstance and have ample time to react to issues or changes, whereas without one I would be forced into crunch to finish the project. That would be detrimental to the quality of my work, as I would not be submitting the best piece I could have created, purely because of poor time management.

THE CONCEPT AND THE STORYBOARD

The concept for this project is to place the viewer in the centre of a cyberpunk city, surrounded by a variety of buildings. The viewer will be a person within the city, standing on a small raised bridge and observing events occurring near their position, such as holographic advertisements on the sides of buildings, futuristic vehicles flying by and pedestrians passing them, making the city feel entirely alive.

When I first settled on creating a 360° video, I built a small concept of it in Open Brush and Gravity Sketch as a means of experimenting with scale and lighting and exploring various ideas.

As this is a stationary experience, with the only movement being the viewer rotating their head, the actions in the video need to occur near the camera to maintain the viewer's engagement and presence.

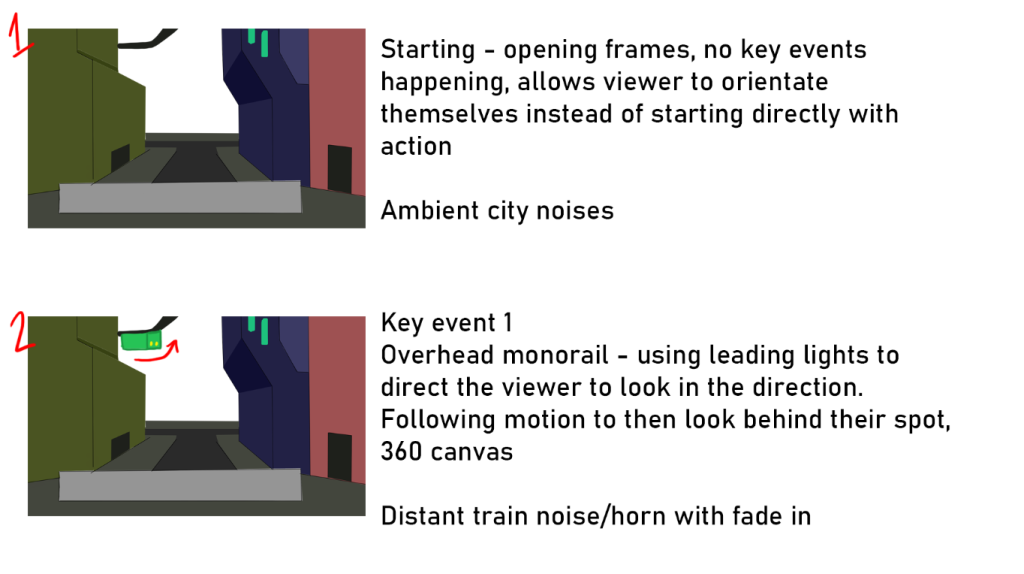

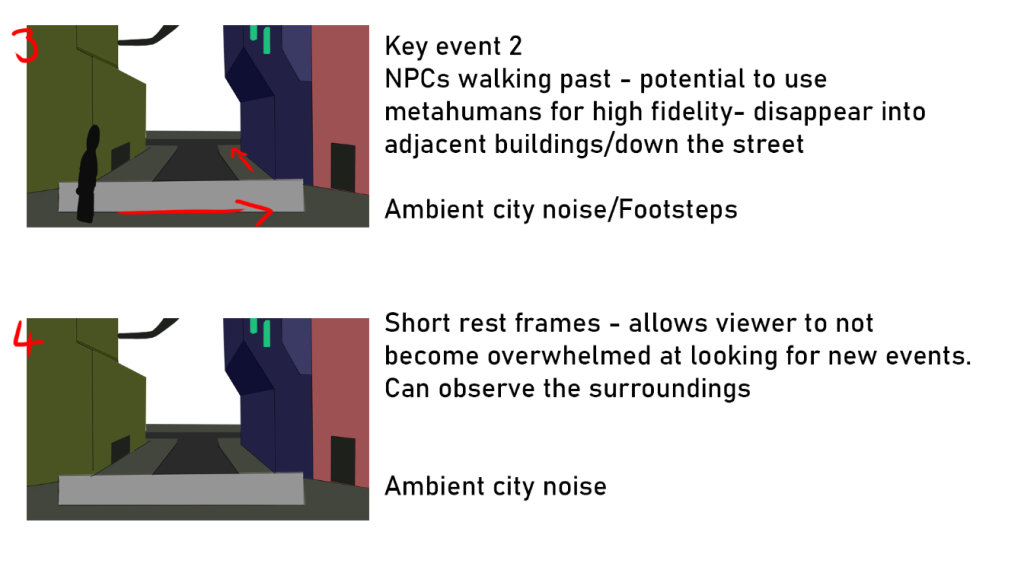

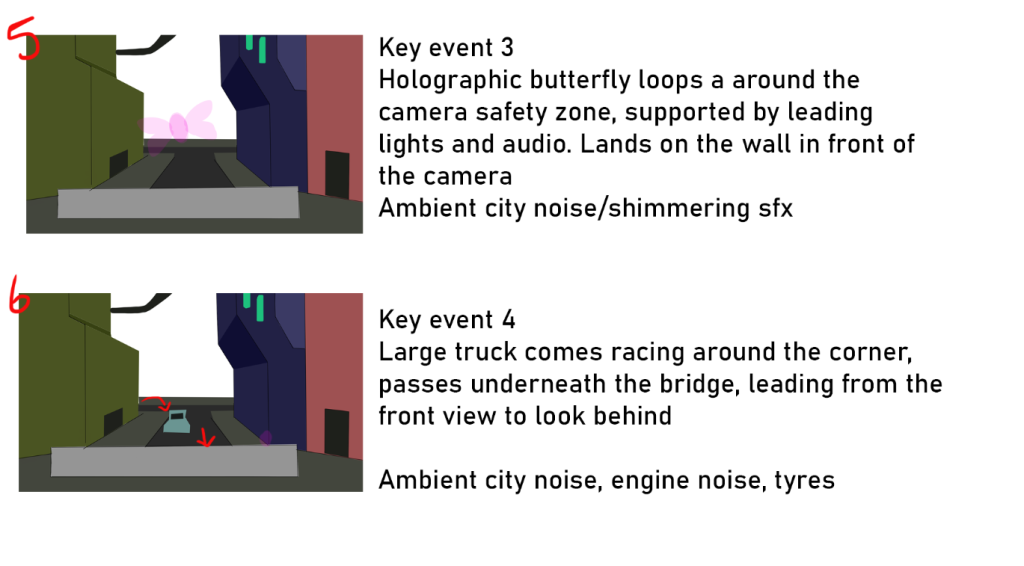

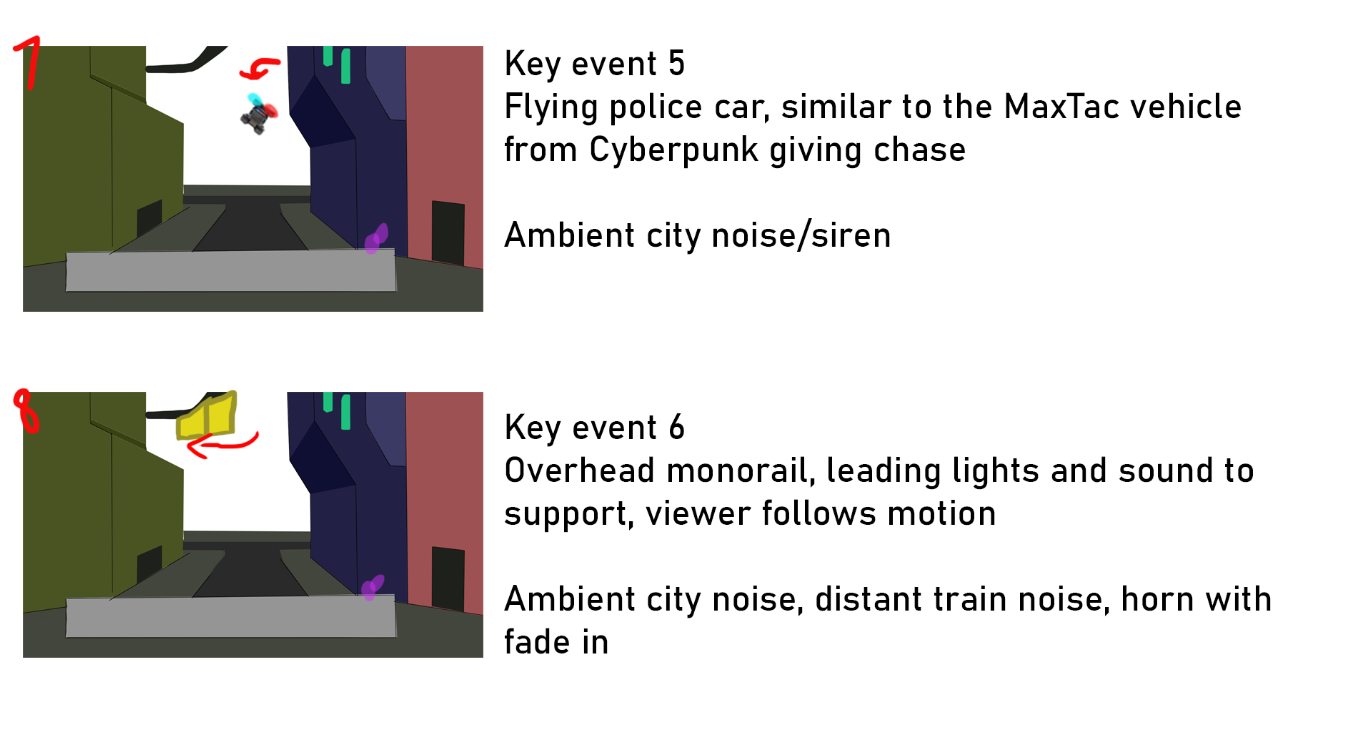

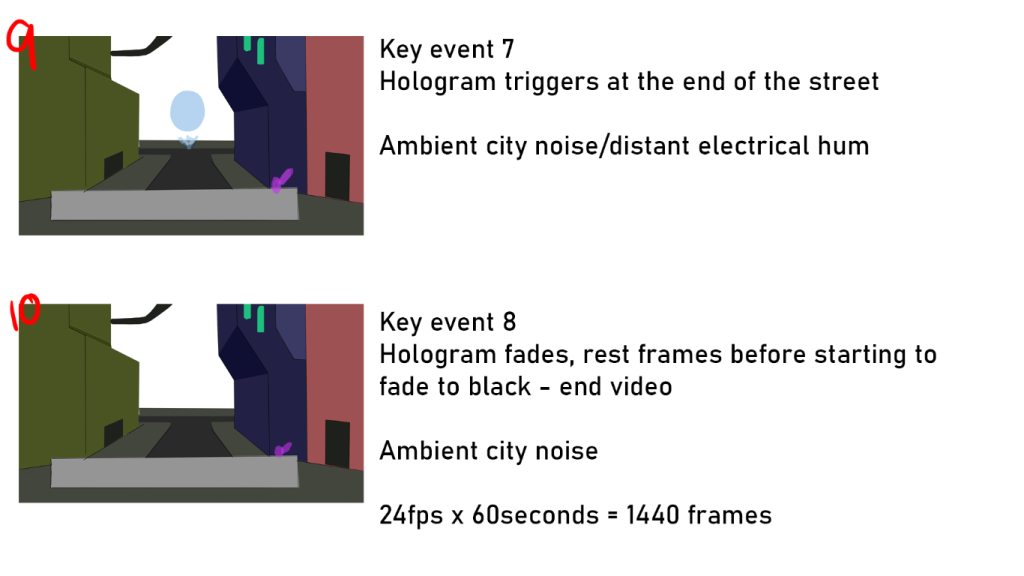

As my project is a 360° video, I decided to draw my storyboard from the front-facing position rather than attempting to replicate everything that happens within 360 degrees of the camera. With this storyboard, I wanted to capture a basic form of some of the key events in the sequence, such as a monorail line passing over the camera's position. Because viewers tend to follow moving objects, the monorail's path should lead them to turn and look behind their position, taking in the entirety of the 360° canvas.

I have also accounted for resting frames in the 360° video, so that the viewer is not constantly searching the space for the next event, which might leave them overwhelmed. Each "event" will be signalled to the viewer through light, animation and audio.


REFERENCED MATERIAL:

Ayaga, V. (2023) Is Virtual Reality (VR) Bad for Your Eyes? Vision Center. Available online: https://www.visioncenter.org/blog/is-vr-bad-for-your-eyes/.

CDPROJEKTRED (2020) Cyberpunk 2077: from the Creators of the Witcher 3: Wild Hunt. Cyberpunk 2077. Available online: https://www.cyberpunk.net/gb/en/.

Doty, A. (2017) The pros and cons of using 360 video for your project. Medium. Available online: https://medium.com/secret-location/the-pros-cons-of-using-360-video-for-your-project-839cb34f2255.

Eidos Montreal (2013) Deus Ex: Human Revolution – Director’s Cut. Steam. Available online: https://store.steampowered.com/app/238010/Deus_Ex_Human_Revolution__Directors_Cut/.

ION LANDS (2020) Cloudpunk. Steam. Available online: https://store.steampowered.com/app/746850/Cloudpunk/.

King-Thompson, J. (2017) The Benefits of 360˚ Videos & Virtual Reality in Education. Blend Media. Available online: https://app.blend.media/blog/benefits-of-360-videos-virtual-reality-in-education.

LaMotte, S. (2017) The very real health dangers of virtual reality. CNN. Available online: https://edition.cnn.com/2017/12/13/health/virtual-reality-vr-dangers-safety/index.html.

Mindport (2023) 3 DoF vs. 6 DoF and why it matters. www.mindport.co. Available online: https://www.mindport.co/blog-articles/3-dof-vs-6-dof-and-why-it-matters.

Off World Live (2021) 360 Degree Virtual Camera for Unreal Engine. Off World Live Limited. Available online: https://offworld.live/products/unreal-engine-360-degree-camera.

Sheremetov, D. and Slesar, M. (2023) How Virtual Reality Is Changing the Entertainment Industry. onix-systems.com. Available online: https://onix-systems.com/blog/revolutionizing-movie-industry-through-vr-movie-apps.

Thompson, S. (2023) Motion Sickness in VR: Why it happens and how to minimise it. virtualspeech.com. Available online: https://virtualspeech.com/blog/motion-sickness-vr.

Wu, F., Bailey, G.S., Stoffregen, T. and Suma Rosenberg, E. (2021) Don’t Block the Ground: Reducing Discomfort in Virtual Reality with an Asymmetric Field-of-View Restrictor. Symposium on Spatial User Interaction.