When discussing virtual reality (VR) today, the range of fields that it can be applied to and enhance seems almost endless. The entertainment industry is listed as one of the leading industries for VR applications (Alcanja, 2022), with uses ranging from playing a VR game on a headset at home to dedicated cinemas built around the technology (Titov, 2020; Sheremetov and Slesar, 2023).

For the second assignment of the Emerging Technologies module, I created an immersive VR experience featuring spatial audio, VR-specific Blueprints and high-fidelity models. The reference list at the bottom of this post covers only the research material discussed here. The references for the assets I used can be found in my post titled “Production Piece” and at the end of the videos embedded in that post.

THE PROJECT OVERVIEW AND DISCUSSING THE PIVOT

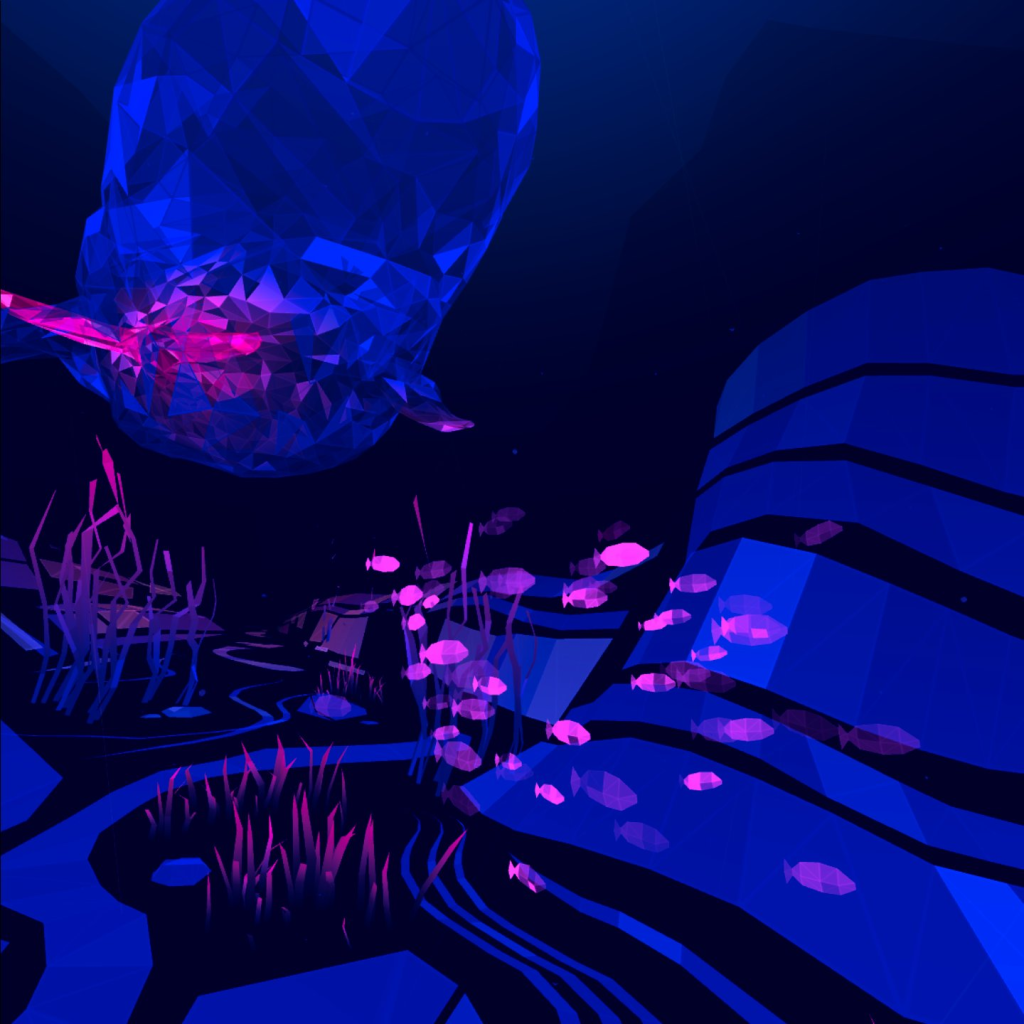

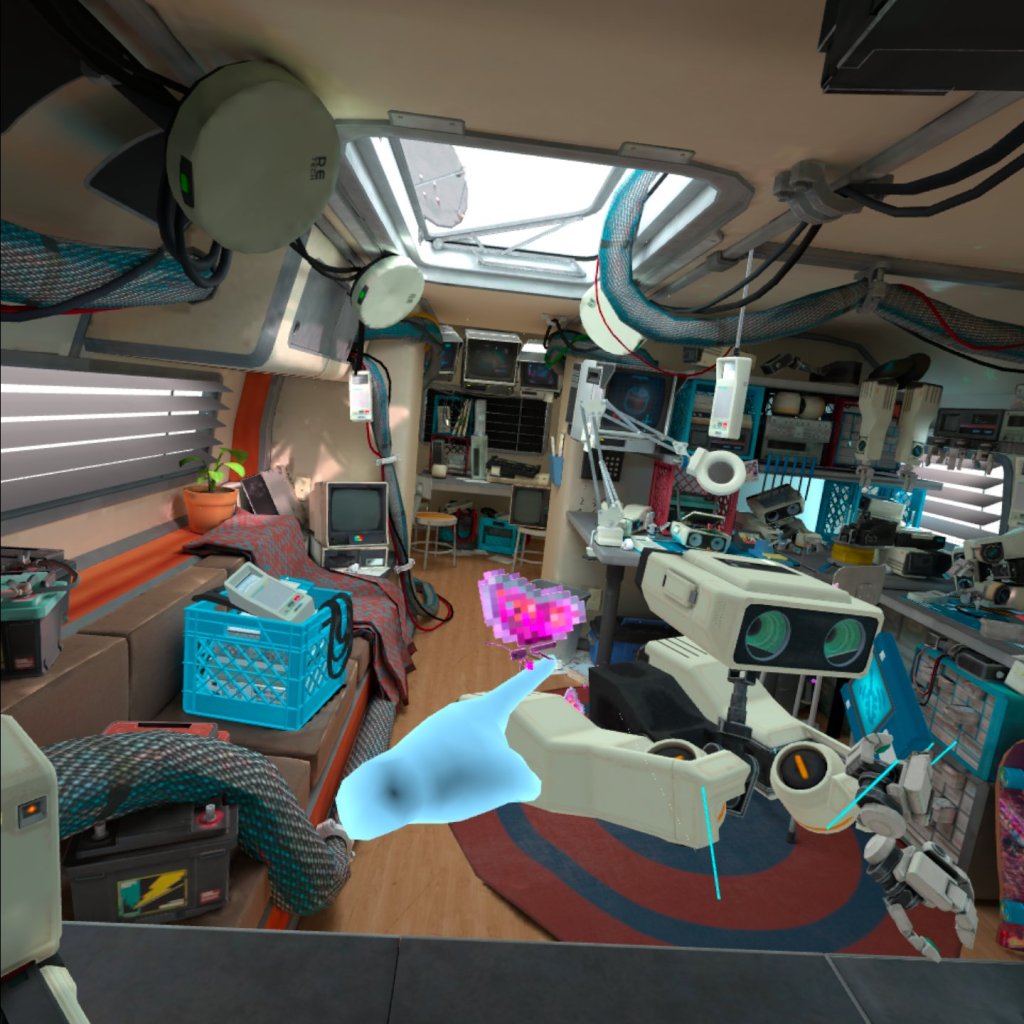

For this project, I created an immersive VR experience in Unreal Engine 5.2 that places the user within a cyberpunk-inspired cityscape, with the ability to explore and interact with several elements in the scene. The experience features 6 degrees of freedom and spatial audio, and makes use of several systems within Unreal Engine, such as Nanite to handle highly detailed meshes and Niagara for particles. The purpose of the project is purely entertainment: the level offers the user a variety of activities and example minigames.

My completed project has pivoted slightly from what I proposed in the first assignment, changing from a 360° video with only 3 degrees of freedom to an immersive VR experience with 6 degrees of freedom. With the 360° video, the user would have been restricted to a single location while events occurred around them, effectively placing them in a “fly on the wall” position. The user would not have been able to interact with anything around them, which I believe would have reduced their engagement: they would be visually transported to a different world, but unable to interact with or explore it. It is also worth noting that cybersickness is commonly reported with 3 degrees of freedom content, as there is a disconnect between what the user sees and what their body feels, leading to symptoms such as nausea and dizziness (Lasserre, 2022; Poularakis, Mockford and Meardi, 2022).

In comparison, 6 degrees of freedom content is reported to reduce motion sickness and feelings of disorientation by fully immersing the user and maintaining their sense of presence (Thompson, 2023). Furthermore, 6 degrees of freedom allows the user to navigate the level and have the sound change and react as it would in real life (Voyage Audio, 2022). Moving closer to an object that generates noise increases its volume, and moving away reduces it, which further maintains the user’s presence, as what they see and what they hear are consistent with each other. The audio in the virtual world behaves as it would in reality.
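To illustrate the distance behaviour described above, the sketch below models a simple linear volume falloff, loosely similar in spirit to the distance attenuation that Unreal Engine’s sound attenuation settings provide. It is a simplified Python stand-in, not the setup used in the project, and the parameter names `inner_radius` and `falloff_distance`, their default values and the “neon sign” source are illustrative assumptions.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def linear_attenuation(distance: float, inner_radius: float, falloff_distance: float) -> float:
    """Volume multiplier in [0, 1] for a listener `distance` units from a sound source.

    Inside `inner_radius` the sound plays at full volume; beyond it the
    volume drops linearly, reaching silence after a further `falloff_distance`.
    """
    if distance <= inner_radius:
        return 1.0
    if distance >= inner_radius + falloff_distance:
        return 0.0
    return 1.0 - (distance - inner_radius) / falloff_distance


def volume_at(listener: Vec3, source: Vec3, inner_radius: float = 200.0,
              falloff_distance: float = 800.0) -> float:
    """Volume heard at `listener` from `source` (hypothetical default radii)."""
    return linear_attenuation(math.dist(listener, source), inner_radius, falloff_distance)


if __name__ == "__main__":
    neon_sign = (0.0, 0.0, 0.0)  # hypothetical buzzing sign at the origin
    # Walk the listener towards the sign and print the volume at each step.
    for x in (1200.0, 800.0, 400.0, 100.0):
        print(f"distance {x:>6.0f}: volume {volume_at((x, 0.0, 0.0), neon_sign):.2f}")
```

Walking from 1200 units away to 100 units away, the printed volume rises from 0.00 through 0.25 and 0.75 to 1.00, which is the “louder as you approach” effect that a 360° video cannot reproduce once the camera position is fixed.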

I switched from the 360° video to the VR experience because I felt it had more potential to be truly immersive, with genuine spatial audio that the user can react to, rather than an attempt to replicate spatial audio in post-production, which would be less accurate.

IDEA GENERATION AND RESEARCH

My production piece grew out of my personal fondness for dystopian games and films, particularly the cyberpunk genre. I find the designs often featured in this genre extremely interesting, be it a character, a vehicle or a building.

Additionally, around the time I started thinking about which direction to take the second assignment in, I had recently purchased a Meta Quest 2 headset. My experience with VR was fairly limited at the time, aside from occasionally painting in Tilt Brush on a family member’s headset, so I went through Oculus’s First Steps (Oculus, 2019) and Meta’s First Hand (Meta, 2023) to reintroduce myself. I found it very entertaining to be transported into an entirely different world and interact with various elements without repercussion. I could knock over a tower of cubes repeatedly, throw paper airplanes and take a moment to truly look around the environment I was in, whereas most games would constantly prompt me that I was not completing an objective to progress a quest line.

From there, I researched other VR games that offered a similar level of freedom to First Hand and First Steps, and found only two that were comparable: Oculus’s First Contact (Oculus, 2016) and Owlchemy Labs’ Job Simulator (Owlchemy Labs, 2016). In essence, both games let the user experience them at their own pace and in their own way, without constant reminders of what the game believes they should be doing to progress. If the user wanted to drink coffee, throw bouncy balls over the cubicle walls or eat donuts repeatedly in Job Simulator, they could. If they wanted to repeatedly create butterflies, fire off rockets or constantly wave at the robot companion in First Contact, they could. Neither game stopped the user from experiencing it how they wanted to, which I found enjoyable.

I decided to take the freedom I experienced when playing these games and combine it with my personal fondness for the cyberpunk/dystopian genre. I wanted to create a VR experience that users could explore however they wanted, with true spatial audio, building their sense of presence by fully immersing them in the world I was creating.

PROJECT MANAGEMENT

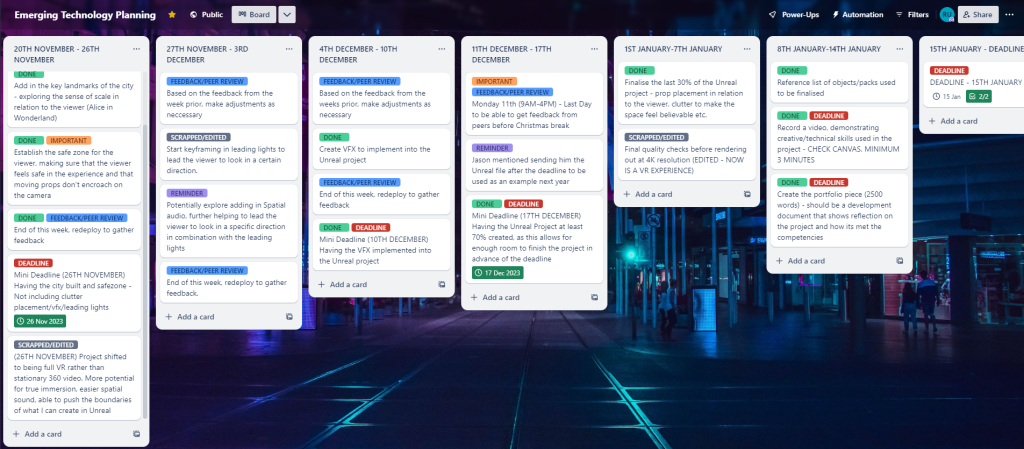

For this project, I used Trello, a project management tool built around Kanban-style boards, to block out time to work on the project. As mentioned at the start of this post, I was originally creating a 360° video, but pivoted to creating a VR experience on 26th November. I already had the cityscape blocked in by this stage, which allowed me to focus on setting up the Blueprints required for a VR experience.

Link to my Trello Board: Emerging Technology Planning | Trello

When I switched to the VR project, some of my tasks changed because they were no longer needed. For example, I had planned to use leading lights to direct the user to look in specific directions, but these were no longer necessary once the user could move around and look wherever they wanted. I had roughly 70 percent of the project completed by 17th December, meeting the personal deadline I had set, which left only minor tasks such as set dressing and tweaking some Blueprints for the remaining weeks. When I drew up the schedule, I kept the workload manageable on a week-by-week basis and worked steadily through it, rather than leaving everything to the last minute and facing an impossible workload in a shorter timeframe.

My planning paid off, as I did run into a few bugs and errors in the first week of January. By then, all that remained was set dressing and some minor tweaks and fine-tuning to a couple of the Blueprints, which gave me more time to focus on the issues at hand. Without this plan in place, I would have been creating Blueprints, retargeting animations, set dressing and fixing issues all at once, effectively forcing myself into crunch in order to present a finished project.

The main challenge I faced during this project was Blueprinting. As mentioned in the narrated video, I am a 3D artist first and foremost, and coding/Blueprinting is not my strong suit. My approach to Blueprinting is best described as trial and error: I stay with a Blueprint until it either works by some miracle or turns out to be entirely broken. To help with this, I predominantly followed the Udemy course “Unreal Engine 5 VR Blueprint Crash Course” by Artem Chaika (Chaika, 2023) to build the majority of the interactions featured in the level. The course is aimed at beginners, so I found it easy to follow and create what I needed.

SOFTWARE PROFICIENCY

As mentioned above, I used Unreal Engine 5.2 for this project. My knowledge of Blueprinting in Unreal still has room for improvement, which is why I relied on the Udemy course and several YouTube tutorials to create the VR experience seen in the video.

The audio in the scene was sourced from Epidemic Sound, as I have consistently found their audio to be of very high quality. Some of the clips were slightly too loud during initial testing, so I edited and adjusted them to achieve a better result.

The monorail and the cars seen in the video move by following splines set up in their respective Blueprints. I followed Matt Aspland’s video on making an object follow a spline path (Aspland, 2022), as the other methods of moving the vehicles involved setting up Chaos Vehicle physics simulations and then AI to drive them, which is currently beyond my skill set. I feel that having the cars and the monorail move around the play space makes the area feel alive and lived in. As the play space resembles a cityscape, I thought it would be better to have moving elements that make the area feel populated and larger than it actually is, rather than a desolate, empty city.
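The core idea of spline following can be sketched in a few lines: convert the elapsed time into a distance travelled, wrap that distance around the length of the loop, and look up the position at that distance along the path. This is not the Blueprint from Matt Aspland’s tutorial; it is a simplified Python sketch that assumes a closed loop made of straight segments rather than a smooth Unreal spline, and the `PathFollower` class and its method names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


class PathFollower:
    """Moves an object around a closed path of points at constant speed.

    Simplification: segments between points are straight lines, not the
    smooth curves an Unreal spline component would produce.
    """

    def __init__(self, points: List[Point], speed: float):
        if len(points) < 2:
            raise ValueError("a path needs at least two points")
        self.points = points
        self.speed = speed
        # Pair each point with the next one, closing the loop back to the start.
        self.segments = list(zip(points, points[1:] + points[:1]))
        self.lengths = [math.dist(a, b) for a, b in self.segments]
        self.total_length = sum(self.lengths)

    def location_at_distance(self, d: float) -> Point:
        d %= self.total_length  # wrap so the vehicle circles the loop forever
        for (a, b), length in zip(self.segments, self.lengths):
            if d <= length:
                t = d / length if length > 0 else 0.0
                # Linear interpolation between the segment's end points.
                return tuple(a_i + (b_i - a_i) * t for a_i, b_i in zip(a, b))
            d -= length
        return self.points[0]  # floating-point leftovers land at the loop start

    def location_at_time(self, seconds: float) -> Point:
        return self.location_at_distance(seconds * self.speed)
```

For a square loop of four 100-unit sides travelled at 50 units per second, `location_at_time(1.0)` and `location_at_time(9.0)` both return `(50.0, 0.0, 0.0)`, because 450 units wraps back to 50 units around a 400-unit loop. Driving movement by distance rather than by physics is what makes this approach so much simpler than a Chaos Vehicle and AI setup, at the cost of vehicles that cannot react to anything.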

I was also able to use Epic Games’ MetaHumans to give the player a new element to look at and inspect, adapting how I had originally planned to use them to better suit the VR medium. As the user can teleport around the scene, I placed the MetaHumans in set locations that the user can choose to visit. I had experimented with MetaHumans previously, so I was somewhat familiar with the MetaHuman Creator, how MetaHumans work and how to edit certain parts of them within Unreal Engine. The MetaHumans I used as a starting point were Zhen, Erno, Ettore and Vivian; these were then edited to fit the environment. With a longer timeframe, I would have experimented further with MetaHumans in the scene and researched how to apply custom clothing.

When a MetaHuman is first brought into an Unreal Engine level, it appears in the standard T-pose. I wanted to use MetaHumans in the project, but did not want to populate the level with several characters standing in a T-pose, as I believe the pose alone would reduce the user’s sense of presence. I therefore used Mixamo animations, such as a sitting animation, and retargeted them to the MetaHuman base skeleton, making the MetaHumans appear more realistic to the user and maintaining presence in the scene.

One of the elements I unfortunately had to remove was a fade to black, and then back in, when the player changed level. I wanted this fade to make level changes less noticeable, as an immediate change of level, and consequently of the surrounding environment, can be quite disorientating for the user. During testing, the fade itself worked, but for a reason I was unable to identify, it also stopped the user from teleporting or interacting with anything in the scene. I tried repeatedly to get this feature working, but ultimately had to remove it in order to present a finished project.

ETHICAL CONSIDERATIONS AND THE USER EXPERIENCE

This experience has 6 degrees of freedom, allowing the user to look around freely about all axes and to move along them as well. With immersive VR content, cybersickness can affect users differently: one user might not feel its effects at all while another does. As a result, I made sure to include a plane underneath the user as a constant reference point, so that if a user starts to feel symptoms of cybersickness, they can look down at the ground plane to ground themselves and reduce those symptoms (Wu et al., 2021; Whittinghill, 2015).

The only exception to the user being able to ground themselves is the climbing activity. However, this activity is optional, and the user is free to decide whether or not they want to experience it. This allows each user to experience the level however they want, without a prompt directing them to a specific location to complete a specific task. For example, a user with a fear of heights may decide to skip the activity, whereas another user might start climbing and go the entire way.

Additionally, to ensure that the user continues to feel safe in the experience, I limited where they can teleport using a navigation mesh (Nav Mesh). I did not want the player to be able to teleport into the middle of the road and be met with oncoming vehicles, causing fear and anxiety. A study by researchers from the University of Copenhagen on emotional responses in virtual reality found that fear was most prevalent when objects were rushing towards participants (Hurler, 2021). Such fear can have a range of effects, from a raised heart rate and heightened anxiety to accidents, as the user tries to run away from the virtual threat and hits walls and furniture around them.
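For the teleport system, a navigation mesh essentially answers one question: is this destination somewhere the user is allowed to stand? The sketch below approximates that check with hand-authored walkable polygons (two pavements either side of a road) and a standard even-odd point-in-polygon test, so a target aimed at the road is rejected. It is a simplified 2D Python stand-in, not Unreal’s navigation system, and the function names and polygon coordinates are hypothetical.

```python
from typing import List, Tuple

Point2D = Tuple[float, float]
Polygon = List[Point2D]


def point_in_polygon(point: Point2D, polygon: Polygon) -> bool:
    """Even-odd rule: cast a ray towards +x and count how many edges it crosses."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the point's y value can be crossed by the ray.
        if (y1 > y) != (y2 > y):
            # x coordinate where this edge meets the horizontal line through the point.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def is_valid_teleport_target(target: Point2D, walkable_areas: List[Polygon]) -> bool:
    """Accept a teleport destination only if it lies inside some walkable area."""
    return any(point_in_polygon(target, area) for area in walkable_areas)


# Hypothetical layout: a road runs along y = 200..400 with a pavement on each side.
PAVEMENT_A: Polygon = [(0.0, 0.0), (1000.0, 0.0), (1000.0, 200.0), (0.0, 200.0)]
PAVEMENT_B: Polygon = [(0.0, 400.0), (1000.0, 400.0), (1000.0, 600.0), (0.0, 600.0)]
WALKABLE = [PAVEMENT_A, PAVEMENT_B]
```

With this layout, `is_valid_teleport_target((500.0, 100.0), WALKABLE)` accepts a spot on a pavement, while `(500.0, 300.0)` in the middle of the road is rejected. Leaving the road out of the walkable set, rather than trying to detect danger at runtime, mirrors the reasoning above: the user simply cannot end up in front of oncoming traffic.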

Furthermore, I was mindful of how loud the audio sources in the level were. From what I researched, the volume is set relatively high by default when a headset connects to a computer. Had I left the audio clips at their original volume as downloaded from Epidemic Sound, this combined with the high headset volume would have posed a risk to the user’s hearing (LaMotte, 2017). To keep the user safe, I lowered the clips to a safer level.
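Lowering a clip’s volume is usually expressed in decibels, where a change of G dB scales the waveform’s amplitude by 10^(G/20). The sketch below applies that conversion to normalised audio samples. The post does not state how many decibels the clips were reduced by, so the -6 dB and -20 dB figures used here are purely illustrative, and the function names are hypothetical.

```python
from typing import List


def db_to_amplitude(gain_db: float) -> float:
    """Convert a gain in decibels to a linear amplitude multiplier."""
    return 10 ** (gain_db / 20)


def apply_gain(samples: List[float], gain_db: float) -> List[float]:
    """Scale normalised samples (-1.0..1.0) by gain_db, clamping to the valid range."""
    factor = db_to_amplitude(gain_db)
    return [max(-1.0, min(1.0, s * factor)) for s in samples]


if __name__ == "__main__":
    print(f"-6 dB  -> x{db_to_amplitude(-6.0):.3f}")   # roughly halves the amplitude
    print(f"-20 dB -> x{db_to_amplitude(-20.0):.3f}")  # one tenth of the amplitude
    print(apply_gain([1.0, -0.5, 0.25], -6.0))
```

Because decibels are logarithmic, a modest-sounding reduction such as -6 dB already halves the signal’s amplitude, which is why small edits to the clips can make a meaningful difference when the headset volume is already high.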

FORWARD THINKING AND EMERGING TRENDS

I believe my project demonstrates forward thinking by making use of newer systems such as Unreal Engine’s Nanite system for handling highly demanding meshes. I used Unreal Engine 5.2 because, compared to Unreal Engine 5.3, I found it more stable, with fewer bugs in the engine software.

I used Nanite for the buildings in the scene, as without it, Unreal Engine consistently displayed the “Video Memory has been exhausted” warning and degraded the quality of everything in the scene. With Nanite enabled, the warning disappeared, and I could create an interesting and immersive experience for the user to navigate and explore.

I believe that, given the direction virtual reality is heading, the limitations of VR headsets will steadily diminish and opportunities to push the boundaries of the medium will increase. For entertainment purposes in particular, I think virtual reality can be taken in many directions and improved on in the coming years to make the user experience truly enjoyable and immersive.

REFERENCES

Alcanja, D. (2022) Virtual Reality Applications: 10 Industries Using Virtual Reality in 2022. www.trio.dev. Available online: https://www.trio.dev/blog/virtual-reality-applications.

Aspland, M. (2022) How To Make An Object Follow A Spline Path – Unreal Engine Tutorial. www.youtube.com. Available online: https://www.youtube.com/watch?v=-V6D5WtemMI

Chaika, A. (2023) Unreal Engine 5 VR Blueprint Crash Course. Udemy. Available online: https://www.udemy.com/course/unreal-engine-5-vr-blueprint-crash-course/.

Hurler, K. (2021) How virtual reality causes (and cures) fear. Advanced Science News. Available online: https://www.advancedsciencenews.com/virtual-reality-fear/.

LaMotte, S. (2017) The very real health dangers of virtual reality. CNN. Available online: https://edition.cnn.com/2017/12/13/health/virtual-reality-vr-dangers-safety/index.html.

Lasserre, S. (2022) VR motion sickness: how to reduce it in your professional use? blog.techviz.net. Available online: https://blog.techviz.net/vr-motion-sickness-how-to-avoid-it-in-your-professional-use.

Meta (2023) First Hand on Meta Quest | Quest VR games. www.meta.com. Available online: https://www.meta.com/en-gb/experiences/5030224183773255/

Oculus (2016) Oculus First Contact on Meta Quest | Quest VR games. www.meta.com. Available online: https://www.meta.com/en-gb/experiences/2188021891257542/

Oculus (2019) First Steps on Meta Quest | Quest VR games. www.meta.com. Available online: https://www.meta.com/en-gb/experiences/1863547050392688/

Owlchemy Labs (2016) Job Simulator on Meta Quest | Quest VR games. www.meta.com. Available online: https://www.meta.com/en-gb/experiences/3235570703151406/

Poularakis, S., Mockford, K. and Meardi, G. (2022) IBC2022 Tech Papers: Data compression for 6 degrees of freedom virtual reality applications. IBC. Available online: https://www.ibc.org/download?ac=21868

Sheremetov, D. and Slesar, M. (2023) How Virtual Reality Is Changing the Entertainment Industry. onix-systems.com. Available online: https://onix-systems.com/blog/revolutionizing-movie-industry-through-vr-movie-apps.

Thompson, S. (2023) Motion Sickness in VR: Why it happens and how to minimize it. virtualspeech.com. Available online: https://virtualspeech.com/blog/motion-sickness-vr?ref=footer.

Titov, D. (2020) Virtual Reality in Entertainment: Best Apps and Approaches – Invisible Toys. Augmented Reality Toys. Available online: https://invisible.toys/virtual-reality-development/virtual-reality-entertainment/.

Voyage Audio (2022) Spatial Audio For VR/AR & 6 Degrees Of Freedom – Voyage Audio. Voyage Audio. Available online: https://voyage.audio/spatial-audio-for-vr-ar-6-degrees-of-freedom/

Whittinghill, D. (2015) Technical Artist Bootcamp: Nasum Virtualis: A Simple Technique for Reducing Simulator Sickness in Head Mounted VR. www.gdcvault.com. Available online: https://www.gdcvault.com/play/1022287/Technical-Artist-Bootcamp-Nasum-Virtualis.

Wu, F., Bailey, G.S., Stoffregen, T. and Suma Rosenberg, E. (2021) Don’t Block the Ground: Reducing Discomfort in Virtual Reality with an Asymmetric Field-of-View Restrictor. Symposium on Spatial User Interaction.