Virtual Reality technology has expanded beyond the entertainment industry and is now applied in healthcare, simulation and education (Pottle, 2019; Sala, 2021; Wong, 2021).

The finished project builds on the use of virtual reality for educational purposes with the creation of a virtual classroom, intended for teaching the classical sciences of Biology, Physics and Chemistry (Encyclopedia Britannica, 2020) to high-school-aged students. As demonstrated in the accompanying recorded footage, the purpose of this project was to familiarise new high school students, in a safe manner, with the equipment found within a science classroom.

A virtual classroom was chosen primarily because of the wide range of directions in which an educational virtual science experience can be taken. Students can familiarise themselves with the equipment, experiments and scientific concepts in an environment that poses no physical risk to themselves or their peers. Furthermore, Special Educational Needs and Disability (SEND) students, who may need further assistance when transitioning into a new teaching area or schedule, or additional time when learning, can benefit from Virtual Reality and a recreation of their classroom. The student would be able to familiarise themselves and gain confidence within the virtual environment before attending physically, making for a smoother transition in their education (ClassVR, 2017).

A virtual classroom would also be intrinsically more accessible to students and reduce the impact on an institution’s finances. By making content available in a virtual space, students would still be able to learn on a 1-1 basis even when physical equipment, time or space within the classroom is limited (Bourguet et al., 2020). College-level students who are studying the sciences could create and conduct more complex experiments within a virtual environment at no financial detriment to the institution. Materials and reactants that are found on other continents and cannot be transported could be replicated digitally, opening the sciences further to all interested parties.

A further reason for creating a virtual classroom for my project is student perception of the sciences. A study conducted in 2023 found that students’ perception of the sciences was generally negative, with students regarding science as a “difficult subject” and most students in the study “encounter[ing] practical work via videos” (Hamlyn et al., 2023). If a virtual classroom were provided as a resource to schools, one that can be edited and changed to create a number of experiments, it would give students new ways to learn and engage with the content, potentially changing the general perception.

This project was completed in the Unity game engine and made use of the Meta Interaction SDK for the user’s input, movement and interactions within the scene. Teleportation was chosen over smooth locomotion as the main form of movement in order to reduce potential cases of cybersickness or simulation sickness caused by perceived motion (Prithul, Adhanom and Folmer, 2021; Caserman et al., 2021). In addition, the user’s rotation was set to snap rotation rather than smooth rotation as another means of reducing potential cases of cybersickness. Smooth rotation and locomotion are more commonly associated with cybersickness and simulation sickness because the user sees motion that their body does not feel, creating a disconnect between what they can see and what the body physically senses (Thompson, 2020; Tu, 2020).
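The idea behind snap rotation can be illustrated with a short, engine-independent sketch. This is not the Meta Interaction SDK's implementation; the class name, the 45-degree increment and the thumbstick threshold are all assumptions chosen for illustration. The point it shows is that the view jumps by a fixed angle once per thumbstick push, so the user never sees continuous rotational motion.

```python
from dataclasses import dataclass

SNAP_ANGLE_DEG = 45.0   # assumed increment; the report does not state one
STICK_THRESHOLD = 0.7   # assumed thumbstick deflection needed to trigger a turn


@dataclass
class SnapTurner:
    """Hypothetical helper that rotates the player's yaw in discrete steps."""
    yaw_deg: float = 0.0
    armed: bool = True  # False until the stick returns to centre

    def update(self, stick_x: float) -> float:
        """Process one frame of horizontal thumbstick input (-1.0 to 1.0)."""
        if abs(stick_x) < STICK_THRESHOLD:
            # Stick released: allow the next snap.
            self.armed = True
        elif self.armed:
            direction = 1.0 if stick_x > 0 else -1.0
            self.yaw_deg = (self.yaw_deg + direction * SNAP_ANGLE_DEG) % 360.0
            # One snap per push, however long the stick is held.
            self.armed = False
        return self.yaw_deg
```

Holding the stick does not keep turning the view; the user has to release and push again, which is what separates this from smooth rotation.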

DESIGNING THE PROJECT

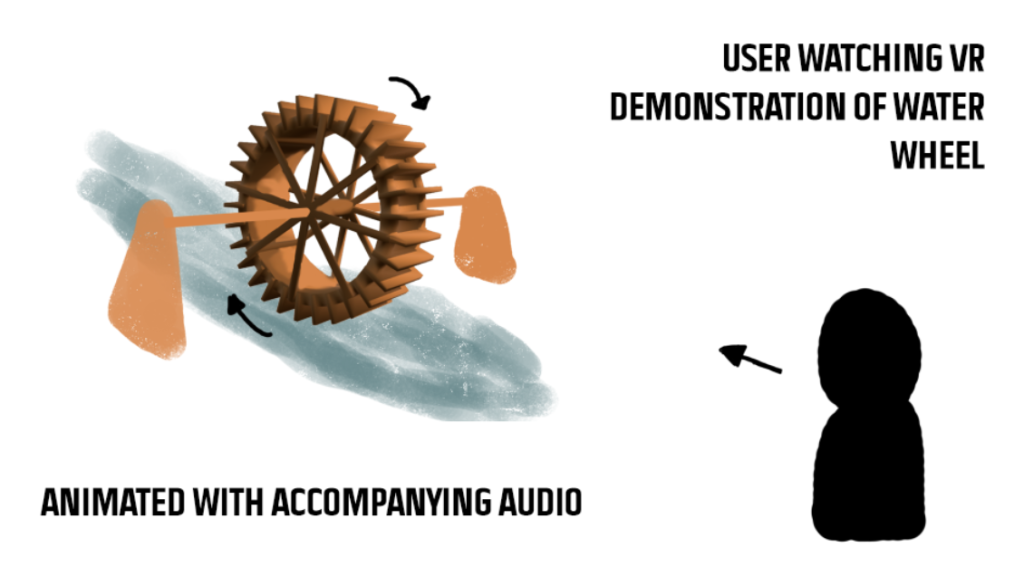

The initial phases of this project were spent primarily on experimenting with the overall idea and seeking feedback on my ideas from the course staff and my peers. One of my initial ideas centred on viewing a water wheel in a virtual environment. The intent was for it to act as a teaching resource for both history and the sciences, with the virtual replica demonstrating how the water wheel improved the lives of those who used it and how its kinetic energy was harvested and utilised. Wearing the virtual reality headset, the user would have been able to walk around the animated model whilst audio played.

When I discussed this idea with my peers and the course staff, the conclusion was that it did not push the boundary of what I could achieve, which led to the project being redesigned after further discussions. The initial idea had taken a stereotypically textbook-reliant topic and brought it into the virtual world but, in this format, did not allow the user to engage in any practical, albeit virtual, work and could potentially result in the user disengaging from the content that they were presented with.

With this in mind, the project was redesigned. The core idea of using virtual reality for educational purposes remained, but the focus shifted to allowing the user to interact with the content in a hands-on manner. The link between the virtual project and the sciences also carried over from the initial idea, as in my opinion a resource built to aid the sciences has more potential for student engagement.

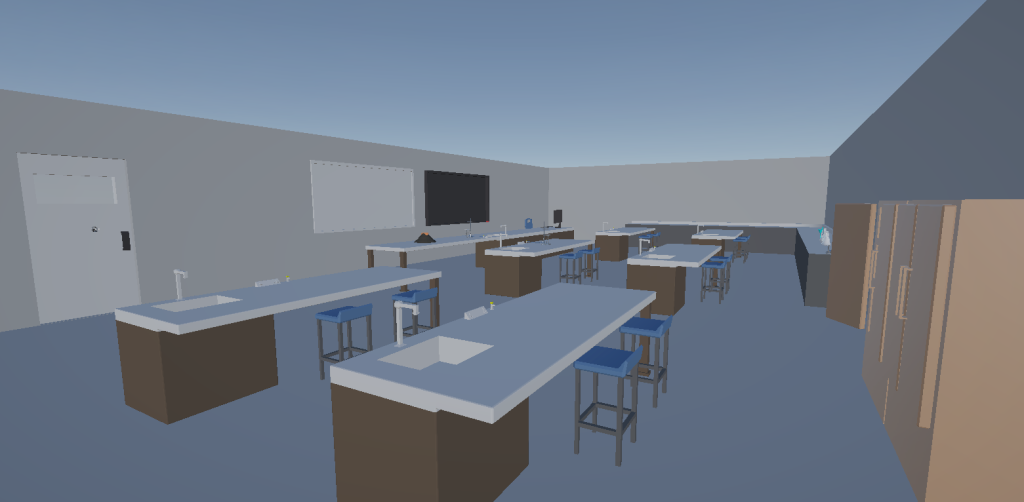

The redesigned project had significantly more interactions planned and allowed the user to engage with the content themselves, taking the science elements featured in the initial project and expanding on them. The visuals of the project are based on a UK science classroom and have been recreated in a low-polygon, stylised fashion in order to reduce demand on the VR headset. High-polygon scenes that are poorly optimised can result in a poor framerate, which can add to potential cases of cybersickness or simulation sickness due to a delay between visuals (Zhang, 2020; Feyerabend, 2017).

(Benchmark Products, 2021)

The intended purpose of the redesigned project is to serve as a resource that teaches younger high school students scientific concepts and familiarises them with the equipment and the classroom itself in a safe manner. Some of the equipment that is used in scientific experiments and in the science classroom itself can be dangerous when mishandled through student inexperience or neglect. As an example, scalpels used in biology when demonstrating dissection, glassware, chemicals and open flames in chemistry experiments, and electrical equipment in physics can all be a danger if mishandled or misused by a student, not only to themselves but also to those around them (Vatix, 2024). A virtual environment, on the other hand, where a student can learn how to use the equipment safely, poses no danger to the user or to those around them.

PROJECT CREATION

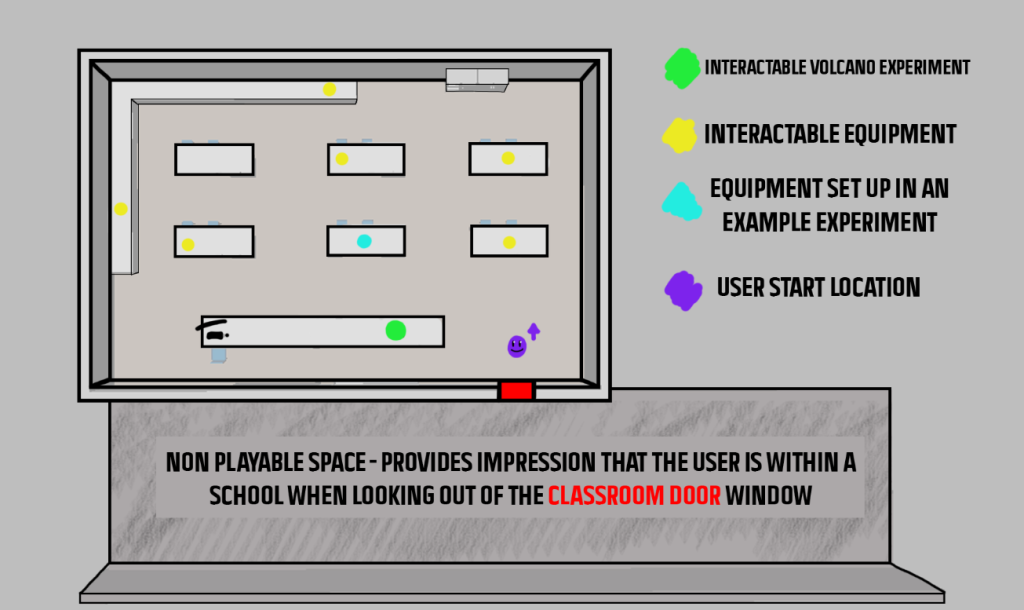

With the project idea now finalised, the creation process could begin. To start with, I modelled the layout of the classroom in Autodesk Maya, ensuring that the assets I created for this project remained at a low polygon count for better hardware performance. The classroom asset went through several iterations, as it was repeatedly brought into the Unity project and deployed to the headset in order to test that the dimensions of the room were suitable for an educational experience. A room that is too large could make the experience feel daunting, whereas a room that is too small could feel claustrophobic and make the whole experience negative for the user.

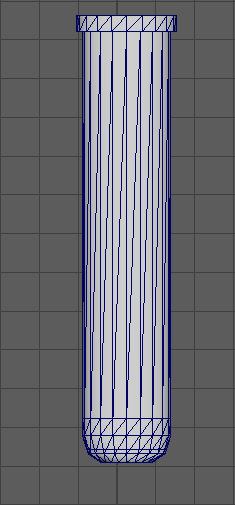

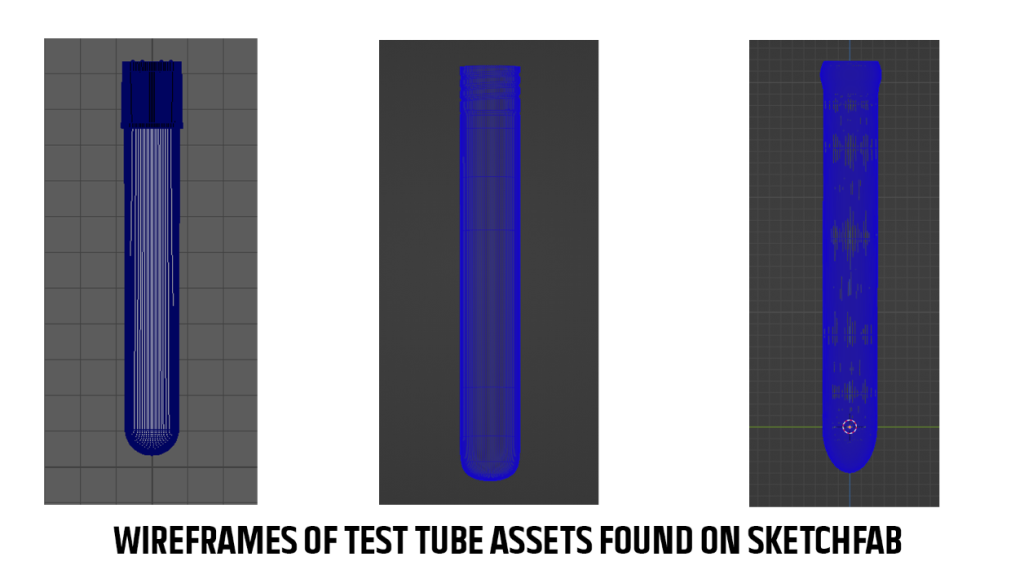

The assets seen within the Unity project were also hand-modelled in Autodesk Maya, because the assets I found when searching public marketplaces had rather high polygon counts. To illustrate this, compare the polygon count of my test tube asset with that of assets found on public marketplaces such as Sketchfab and FAB. My test tube asset is composed of 400 faces with a simple glass material, whereas the test tube assets available on Sketchfab ranged from 25,000 to 65,000 faces with numerous materials set up. As the wireframe images show, the found assets had a significantly higher polygon count.

Additionally, as I had planned for these assets to be duplicated in the space, I needed all of my assets to have a low polygon count. If I were to use found assets such as the ones featured above and duplicate them within the Unity project, there would be a significant strain on the VR headset’s hardware.
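A quick calculation shows how the difference compounds once assets are duplicated. The face counts below are the ones quoted above; the number of copies (30) is a hypothetical figure used only to illustrate the scaling, and the function names are made up for this sketch.

```python
# Face counts quoted in the report.
MY_TEST_TUBE_FACES = 400
MARKETPLACE_FACES_LOW = 25_000
MARKETPLACE_FACES_HIGH = 65_000

# Hypothetical number of test tubes placed in the scene (not from the report).
COPIES = 30


def total_faces(faces_per_asset: int, copies: int) -> int:
    """Total faces rendered when one asset is duplicated `copies` times."""
    return faces_per_asset * copies


def face_ratio(found_faces: int, own_faces: int) -> float:
    """How many times heavier a found asset is than the hand-modelled one."""
    return found_faces / own_faces


if __name__ == "__main__":
    print(total_faces(MY_TEST_TUBE_FACES, COPIES))      # 12000
    print(total_faces(MARKETPLACE_FACES_LOW, COPIES))   # 750000
    print(total_faces(MARKETPLACE_FACES_HIGH, COPIES))  # 1950000
    print(face_ratio(MARKETPLACE_FACES_LOW, MY_TEST_TUBE_FACES))   # 62.5
    print(face_ratio(MARKETPLACE_FACES_HIGH, MY_TEST_TUBE_FACES))  # 162.5
```

Under that assumption, thirty hand-modelled test tubes together use fewer faces than a single marketplace test tube.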

With the assets completed and fitting the desired low polygon count, the next step in elevating the virtual reality experience was the creation of the experiment itself. A common experiment for demonstrating science to prospective and young students is the baking soda and vinegar volcano. It demonstrates the chemical reaction between an acid and a carbonate, the product of that reaction and, additionally, how to be safe when conducting experiments (University of Warwick, 2014; Brookshire, 2020).

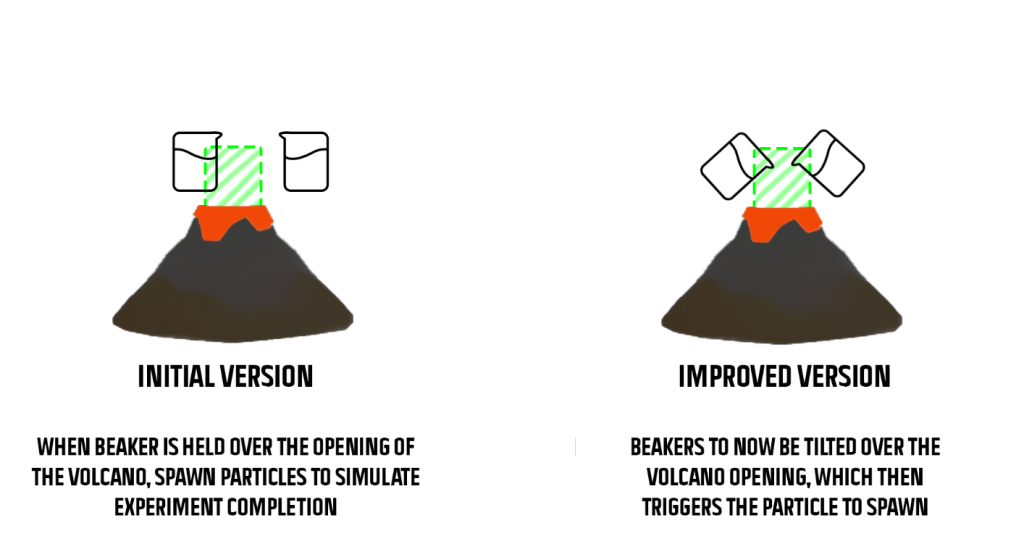

The assets relating to the volcano experiment were placed on the large desk at the front of the virtual classroom and made interactable with the Meta Interaction SDK, allowing beakers to be picked up and set down, with a short audio track accompanying the interaction through the FMOD Unity plugin. With the aid of a custom C# script, when two beakers were picked up and held over the opening of the “volcano”, coloured particles spawned to signify the completion of the experiment. I decided to improve on this by adding a tilt mechanic to the beakers, meaning that the user now has to tilt the handheld controllers in order to complete the experiment. Additionally, as the user tilts the beaker, the action is reinforced by a short audio track of liquid being poured, giving the user an element of realism because the virtual action responds as it would in real life. In turn, this maintains the user’s presence in the experience and can keep them engaged with the content, as what they are seeing and hearing makes sense (Voyage Audio, 2022).
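The logic of the tilt mechanic can be sketched independently of the engine. The following is not the project's C# script; it is a minimal Python illustration that assumes the beaker's orientation is available as an "up" vector and that world up is the positive Y axis, as in Unity. The 45-degree threshold is the tilt angle mentioned later in this report; the opening radius and all function names are assumptions made for the sketch.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

POUR_ANGLE_DEG = 45.0   # tilt angle mentioned in the report
OPENING_RADIUS = 0.15   # assumed radius of the volcano opening, in metres


def tilt_angle_deg(beaker_up: Vec3) -> float:
    """Angle in degrees between the beaker's up axis and world up (0, 1, 0)."""
    length = math.sqrt(sum(c * c for c in beaker_up))
    if length == 0.0:
        raise ValueError("beaker_up must be a non-zero vector")
    # The dot product with (0, 1, 0) is just the Y component.
    cos_angle = max(-1.0, min(1.0, beaker_up[1] / length))
    return math.degrees(math.acos(cos_angle))


def is_over_opening(beaker_pos: Vec3, opening_pos: Vec3,
                    radius: float = OPENING_RADIUS) -> bool:
    """True when the beaker is above the opening and within its radius."""
    dx = beaker_pos[0] - opening_pos[0]
    dz = beaker_pos[2] - opening_pos[2]
    return math.hypot(dx, dz) <= radius and beaker_pos[1] > opening_pos[1]


def is_pouring(beaker_up: Vec3, beaker_pos: Vec3, opening_pos: Vec3) -> bool:
    """A beaker pours when tilted past the threshold while over the opening."""
    return (tilt_angle_deg(beaker_up) >= POUR_ANGLE_DEG
            and is_over_opening(beaker_pos, opening_pos))


def experiment_complete(first_pouring: bool, second_pouring: bool) -> bool:
    """The reaction triggers only once both beakers are being poured."""
    return first_pouring and second_pouring
```

In an engine, a check like `is_pouring` would typically run every frame for each held beaker, and its result would trigger both the pouring audio and, once both beakers qualify, the particle effect.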

The interaction system was then applied to the other “equipment” assets in the scene and to the information cards, as the volcano experiment is only one of the interactive elements that were planned. Throughout the classroom are other pieces of scientific equipment that are commonly used at high school level, each with an accompanying information card. The user can interact with both the equipment and the card, and audio plays when the user sets a piece of equipment down. This allows the user to explore the space and interact with more than just the volcano experiment, furthering my intention for the virtual classroom to teach and familiarise new high school students.

Unfortunately, there were a few issues and challenges throughout the creation of this project, such as having to reset and re-add the audio that plays when the beaker is tilted to a 45-degree angle, and the teleportation system breaking whilst I was developing the virtual classroom.

When I implemented the tilt mechanic for the experiment, the line of code in my script that triggered the audio clip stopped firing, even though the code had been updated to detect the beaker’s rotation. This issue was confusing because, to all intents and purposes, the code should have worked outright: there were no issues in the console log relating to this script and the interaction for the beakers still worked as intended, but the audio had stopped playing entirely. To fix this, I went through the FMOD setup wizard once again, declaring where the FMOD session was stored and re-adding the FMOD listener to the VR camera rig. Additionally, I reset the FMOD event emitter component on the beaker asset, resetting and re-declaring the play event states and event paths, which resulted in the audio playing correctly when the beaker was tilted.

The teleportation system that allowed the user to move around the scene broke partway through this project. Once the build was deployed to the headset and the experience loaded, the user would find themselves unable to teleport, with the camera significantly higher than the original, normalised height. This was rather disorientating when testing the build and required immediate attention.

Upon searching the settings and inspector for the VR camera rig, I could see nothing that had been affected. All of the settings were correct and had not been changed, which made it extremely confusing that the teleportation had suddenly broken. Further investigation into the build settings and rebuilding the nav mesh component for the teleportation mechanic yielded no results, which ultimately led me to seek the assistance of staff. With their help, we completely removed the affected camera rig, updated the Interaction SDK within Unity to version 71 and then imported a new camera rig.

This seemingly fixed the issue, but inadvertently caused another. The previous camera rig had been set up with snap rotation as the primary rotation method and with the FMOD listener attached. Both were lost when the rig was removed and reimported, resulting in smooth rotation and no audio playing through the headset. These two issues were solved by going through the FMOD setup wizard once again and switching the rotation type in the inspector for the camera rig.

USER EXPERIENCE EVALUATION

For this project, user experience evaluations were conducted throughout the duration of the project to ensure that the virtual environment worked as intended and that the experience could be deployed onto any headset for any potential students to use.

Explorative usability testing was my primary method of assessing how the project was progressing. It was conducted towards the start of the project to assess sections of my design (Peters, 2021), alongside showing my progress to the course staff and my peers and asking for their input on what I had created and what could be improved. I repeatedly tested how the user would navigate, interact and rotate, and how the audio would play, to ensure that each worked correctly every time the experience was deployed on the virtual reality headset. In turn, this allowed issues to be identified relatively early in the project, so that only minimal edits had to be made to the project and the project idea as a whole. Additionally, as this project is designed to be an educational experience, I repeatedly tested various levels of information, the tone and even the fonts used on the prompts for the experiment and on the information cards positioned near the equipment. This was done because users who are new to virtual experiences may need additional assistance as they explore and engage, but should not be given a block of text that might be ignored.

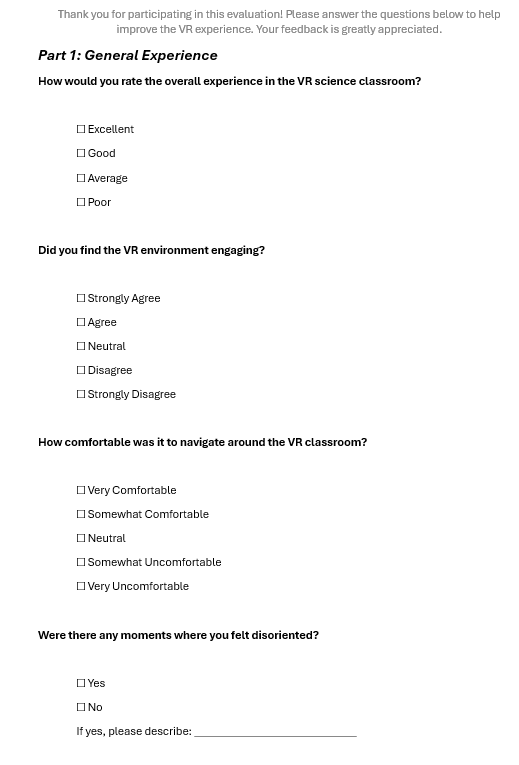

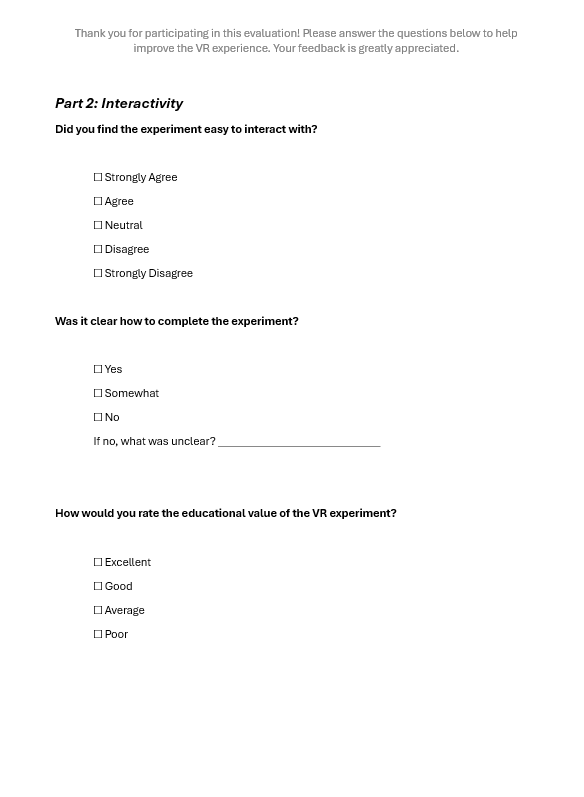

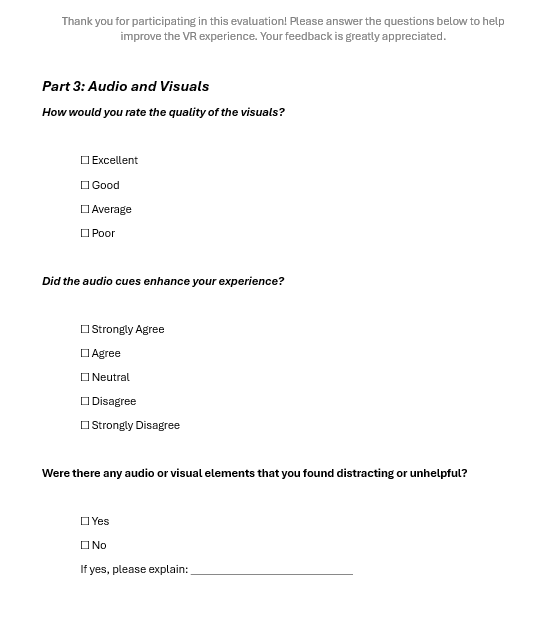

Furthermore, I also created a questionnaire that could be given to students if this educational experience proceeded to a public testing stage. The questions made use of the Likert scale, a scale commonly used to measure opinions and perceptions (Sullivan and Artino, 2013; Jamieson, 2024), offering a range of answers to choose from along with additional areas for writing a custom answer. This questionnaire, visible below, would be presented to students upon exiting the educational experience, and their feedback would then inform any potential redesign.

Questionnaire that would be presented to students if the experience proceeded to a public testing stage.
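If the questionnaire were used, the Likert responses to each question could be summarised with a median and a count per answer, which suits ordinal data of this kind. The sketch below assumes a five-point scale coded 1 to 5; the report does not state how many points the questionnaire uses, and the labels, function name and example responses are hypothetical rather than collected data.

```python
from collections import Counter
from statistics import median
from typing import Dict, List, Union

# Assumed five-point coding; the actual questionnaire may differ.
LIKERT_LABELS = {
    1: "Strongly disagree",
    2: "Disagree",
    3: "Neutral",
    4: "Agree",
    5: "Strongly agree",
}


def summarise(responses: List[int]) -> Dict[str, Union[float, Dict[str, int]]]:
    """Return the median score and the number of responses per label."""
    if not responses:
        raise ValueError("no responses to summarise")
    for score in responses:
        if score not in LIKERT_LABELS:
            raise ValueError(f"invalid Likert score: {score}")
    counts = Counter(responses)
    return {
        "median": median(responses),
        "counts": {label: counts.get(score, 0)
                   for score, label in LIKERT_LABELS.items()},
    }


if __name__ == "__main__":
    # Made-up answers to a single question, for illustration only.
    example = [4, 5, 3, 4, 2]
    print(summarise(example))
```

Free-text answers from the custom answer areas would need to be reviewed separately, as they do not map onto the scale.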

REFLECTION

Throughout this project, several skills were introduced, practised and improved upon, primarily the use of the Unity game engine and C# scripting after several years of inactivity with both. Repeated engagement with the tools and plugins of the game engine allowed me to refine my skills, regain familiarity with the interface, troubleshoot issues more effectively and approach the challenges of my project with greater confidence. Previously, when faced with significant challenges and issues, my approach was closer to brute force: fixing the issues by redesigning entire sections of my work. As I regained familiarity with the Unity game engine, I was able to diagnose the majority of the issues I faced and resolve them myself, avoiding the need for such drastic redesigns.

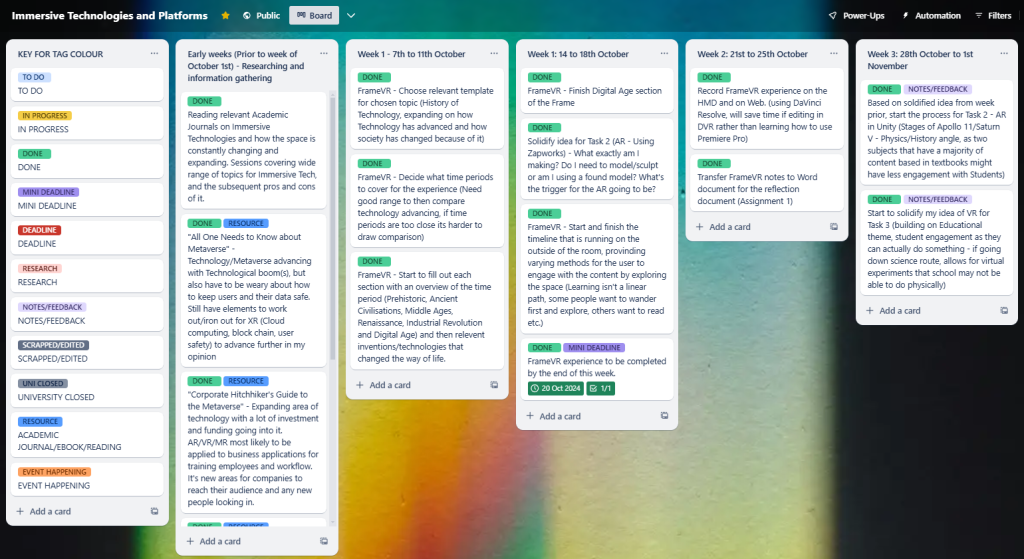

Furthermore, my time management skills were utilised and improved over the course of this project. To manage my time across the WebVR project, the Augmented Reality project and this project, I created a Trello board to organise my workload on a week-by-week basis. The project management board helped ensure that all of my projects would be completed by their respective deadlines and that I avoided a time crunch, which would have affected the quality of the work produced for all three tasks.

I believe that, on the whole, this project is a success, yet it could potentially be improved with the addition of advanced features and mechanics. As an example, an AI companion in the classroom, acting as a teacher figure for the user to interact with, could enhance the overall experience. This addition could result in further user engagement, with the AI teacher acting as a method of 1-1 content delivery. In the real-world counterpart, by comparison, a teacher and potentially one teaching assistant may be responsible for a class of 30 or more students (Henshaw, 2024).

I believe that if this project were expanded beyond an assignment submission, and other developers, artists and sound engineers were brought on board, it could become a resource for mainstream science education.

BIBLIOGRAPHY

Benchmark Products (2021) School science laboratory furniture. Benchmark Products. Available online: https://www.benchmarkproducts.co.uk/products/science/.

Bourguet, M.-L., Wang, X., Ran, Y., Zhou, Z., Zhang, Y. and Romero-Gonzalez, M. (2020) Virtual and Augmented Reality for Teaching Materials Science: a Students as Partners and as Producers Project. [online] Available online: https://www.researchgate.net/publication/346440538_Virtual_and_Augmented_Reality_for_Teaching_Materials_Science_a_Students_as_Partners_and_as_Producers_Project

Brookshire, B. (2020) Study acid-base chemistry with at-home volcanoes. Science News Explores. Available online: https://www.snexplores.org/article/study-acid-base-chemistry-volcanoes-experiment.

Caserman, P., Garcia-Agundez, A., Gámez Zerban, A. and Göbel, S. (2021) Cybersickness in current-generation virtual reality head-mounted displays: systematic review and outlook. Virtual Reality, [online] 25. Available online: https://link.springer.com/article/10.1007/s10055-021-00513-6.

ClassVR (2017) SEND Education. ClassVR. Available online: https://www.classvr.com/virtual-reality-in-education/virtual-augmented-reality-in-send-education/.

Encyclopedia Britannica (2020) Science. In: Encyclopædia Britannica. [online] Available online: https://www.britannica.com/science/science.

Feyerabend, L. (2017) VR’s Motion Sickness Problem — And How To Hack It (With The Help Of Your Cat). Medium. Available online: https://medium.com/dangeroustech/vrs-motion-sickness-problem-and-how-to-hack-it-with-the-help-of-your-cat-a040cf72a7f3.

Hamlyn, B., Brownstein, L., Shepherd, A., Stammers, J. and Lemon, C. (2023) Science Education Tracker 2023. Available online: https://royalsociety.org/-/media/policy/projects/science-education-tracker/science-education-tracker-2023.pdf.

Henshaw, P. (2024) School funding crisis: Larger class sizes, reduced curriculum and deficit budgets – SecEd. SecEd. Available online: https://www.sec-ed.co.uk/content/news/school-funding-crisis-larger-class-sizes-reduced-curriculum-and-deficit-budgets/.

Jamieson, S. (2024) Likert scale. In: Encyclopædia Britannica. [online] Available online: https://www.britannica.com/topic/Likert-Scale.

Peters, E. (2021) Four types of usability tests. Experience UX. Available online: https://www.experienceux.co.uk/ux-blog/four-types-of-usability-tests/.

Pottle, J. (2019) Virtual reality and the transformation of medical education. Future Healthcare Journal, [online] 6(3), 181–185. Available online: https://www.rcpjournals.org/content/futurehosp/6/3/181?fbclid=IwAR19CU54MurxlOyz97FcPkDMQZcNXrme1JMCk2vQTwPi_jxC-9NO5zNDpjw.

Prithul, A., Adhanom, I.B. and Folmer, E. (2021) Teleportation in Virtual Reality; A Mini-Review. Frontiers in Virtual Reality, [online] 2. Available online: https://www.frontiersin.org/journals/virtual-reality/articles/10.3389/frvir.2021.730792/full.

Sala, N. (2021) Virtual Reality, Augmented Reality, and Mixed Reality in Education. Current and Prospective Applications of Virtual Reality in Higher Education, [online] 48–73. Available online: https://www.researchgate.net/publication/351365924_Virtual_Reality_Augmented_Reality_and_Mixed_Reality_in_Education_A_Brief_Overview.

Sullivan, G.M. and Artino, A.R. (2013) Analyzing and Interpreting Data from Likert-Type Scales. Journal of Graduate Medical Education, [online] 5(4), 541–542. Available online: https://pmc.ncbi.nlm.nih.gov/articles/PMC3886444/.

Thompson, S. (2020) Motion Sickness in VR: Why it happens and how to minimise it. virtualspeech.com. Available online: https://virtualspeech.com/blog/motion-sickness-vr.

Tu, F.K. (2020) Smooth locomotion in VR Comparing head orientation and controller orientation locomotion. Blekinge Institute of Technology. Available online: https://www.diva-portal.org/smash/get/diva2:1455644/FULLTEXT02.pdf.

University of Warwick (2014) Volcano. Warwick.ac.uk. Available online: https://warwick.ac.uk/fac/sci/chemistry/outreach/primary/volcano/.

Vatix (2024) 10 Common Laboratory Hazards and How to Control Them – Vatix. Vatix. Available online: https://vatix.com/blog/common-laboratory-hazards/#ktzsrn-1-chemical-hazards.

Voyage Audio (2022) Spatial Audio For VR/AR & 6 Degrees Of Freedom – Voyage Audio. Voyage Audio. Available online: https://voyage.audio/spatial-audio-for-vr-ar-6-degrees-of-freedom/.

Wong, D. (2021) Research guides: Virtual Reality in the Classroom: What is VR? guides.library.utoronto.ca. Available online: https://guides.library.utoronto.ca/c.php?g=607624&p=4938314.

Zhang, C. (2020) Investigation on Motion Sickness in Virtual Reality Environment from the Perspective of User Experience. IEEE Xplore. Available online: https://ieeexplore.ieee.org/abstract/document/9236907.